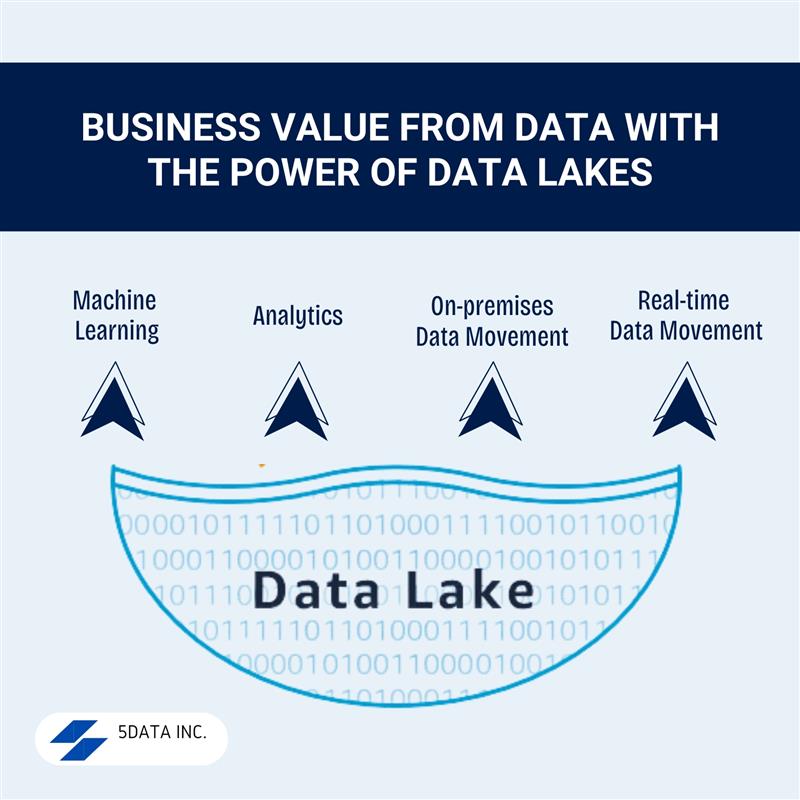

In contrast to most databases and data warehouses, data lakes can store and process all data types, including structured data as well as semi-structured and unstructured data such as images, video, audio, and documents. This is essential for today’s machine learning and advanced analytics use cases.

The use of scalable and cost-effective storage solutions for data pipelines, such as cloud storage, makes it feasible to store large volumes of raw data without incurring excessive costs. Additionally, data lakes seamlessly integrate with data processing tools, facilitating the transformation of raw data into a usable format for analysis.

Key Takeaways

- A data lake serves as a system or repository for storing data in its original, raw format, typically in the form of object blobs or files.

- A data lake is a centralized repository for various types of data used for reporting, visualization, analytics, and machine learning.

- Data lakes can hold structured, semi-structured, and unstructured data in raw form on low-cost storage, which makes them a cost-effective option for data storage.

Understanding Data Lakes

A data lake serves as a centralized repository that stores raw data in its original form. This approach offers a scalable and flexible solution for data storage and analytics. Data lakes are specifically engineered to manage diverse data formats and structures, making them a strong option for big data analytics. Unlike traditional data warehouses, data lakes do not require a predefined schema. Structure is applied when the data is read rather than when it is written, which allows for greater flexibility and adaptability.
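Operating without a predefined schema is often described as schema-on-read. The minimal sketch below illustrates the idea using only the Python standard library. The record shapes, field names, and the `read_with_schema` helper are hypothetical and chosen purely for illustration. They are not part of any particular data lake product.

```python
import json

# Hypothetical raw records as they might land in a lake: shapes differ per source.
raw_lines = [
    '{"user_id": 1, "event": "click", "page": "/home"}',
    '{"user_id": 2, "event": "purchase", "amount": 19.99}',
    '{"device": "sensor-7", "temperature_c": 21.5}',
]


def read_with_schema(lines, fields):
    """Apply a schema at read time: keep only the requested fields,
    filling in None where a record does not have them."""
    rows = []
    for line in lines:
        record = json.loads(line)
        rows.append({field: record.get(field) for field in fields})
    return rows


# Two consumers project the same raw data in different ways.
clickstream_view = read_with_schema(raw_lines, ["user_id", "event"])
sensor_view = read_with_schema(raw_lines, ["device", "temperature_c"])

print(clickstream_view)
print(sensor_view)
```

The raw lines are never rewritten. Each consumer decides which fields matter at the moment it reads them.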

Data Lake Architecture

A data lake’s architecture is structured to store extensive data while enabling efficient, sophisticated processing and analytics. The key components of a data lake are data ingestion, storage, processing, and management, with a data catalog typically used to keep track of what the lake contains. This architecture can be implemented in the cloud, in a hybrid environment, or on-premises, depending on the organization’s specific needs.

Working With Raw Data In A Data Lake

Data lakes are designed to store raw data in its original format, giving data scientists and engineers the flexibility to access and analyze the data as needed. Raw data can be processed and transformed using ETL (Extract, Transform, Load), where data is transformed before it is loaded, or ELT (Extract, Load, Transform), where raw data is loaded first and transformed later. Both approaches support advanced analytics and machine learning at scale.
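As a rough illustration of the ELT pattern, the sketch below lands a raw CSV payload unchanged in a raw zone and transforms it into a cleaned copy in a separate step. It uses a temporary local directory as a stand-in for lake storage. The `raw/` and `curated/` folder names, the file names, and the sample orders are assumptions made for this example only.

```python
import csv
import json
import tempfile
from pathlib import Path


def load_raw(lake_root, source, payload):
    """'Load' step: store the payload unchanged under a raw/ zone."""
    target = Path(lake_root) / "raw" / source / "orders.csv"
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(payload)
    return target


def transform(raw_path, lake_root):
    """'Transform' step: read the raw file later and write a cleaned copy."""
    rows = []
    with open(raw_path, newline="") as handle:
        for row in csv.DictReader(handle):
            if not row["amount"]:
                continue  # skip rows that have no amount
            rows.append({"order_id": int(row["order_id"]),
                         "amount": float(row["amount"])})
    target = Path(lake_root) / "curated" / "orders.json"
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(json.dumps(rows))
    return rows


# A temporary directory stands in for cloud object storage.
with tempfile.TemporaryDirectory() as lake_root:
    raw_file = load_raw(lake_root, "shop", "order_id,amount\n1,10.50\n2,\n3,4.25\n")
    curated_rows = transform(raw_file, lake_root)
    # The raw file still holds all four original lines after the transform.
    raw_still_intact = raw_file.read_text().count("\n") == 4

print(curated_rows)
```

Because the raw file is kept as it arrived, the transform can be changed and rerun later without going back to the source system.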

Ensuring Data Quality in a Data Lake

High data quality is essential for the data ingested into a data lake, because poor-quality data leads to inaccurate insights and decisions. Data management and governance practices uphold data quality and integrity in data lakes. Data quality metrics and monitoring tools can help identify and address quality issues within a data lake.
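Two common examples of data quality metrics are completeness (how often a field is filled in) and duplicate counts. The sketch below shows one simple way to compute them in plain Python. The helper names and the sample records are hypothetical, and real monitoring tools typically track many more checks than these.

```python
def completeness(records, field):
    """Share of records where the field is present and not empty."""
    if not records:
        return 0.0
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)


def duplicate_count(records, key):
    """Number of records whose key value was already seen earlier."""
    seen = set()
    duplicates = 0
    for r in records:
        value = r.get(key)
        if value in seen:
            duplicates += 1
        else:
            seen.add(value)
    return duplicates


# Hypothetical sample of ingested records.
records = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": 2, "email": "b@example.com"},
    {"id": 3, "email": None},
]

email_completeness = completeness(records, "email")
id_duplicates = duplicate_count(records, "id")

print(email_completeness, id_duplicates)
```

Tracking values like these over time makes it easier to notice when an upstream source starts sending incomplete or repeated data.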

Data Lake For Advanced Analytics

Data lakes offer an extensible and flexible solution for advanced analytics, including predictive analytics and machine learning. Their capability to manage large volumes of diverse data makes them a good fit for a variety of advanced analytics use cases. Additionally, data lakes can be integrated with analytics tools and platforms, such as data warehouses and business intelligence platforms, which supports efficient data management and increases their versatility.

Machine Learning In A Data Lake Environment

Applying machine learning algorithms to raw data stored in a data lake can yield valuable insights and predictions. Data lakes present a dynamic and scalable foundation for machine learning, giving data scientists and engineers the means to access and analyze extensive datasets. Machine learning can also help improve data quality, identify anomalies, and uncover patterns within a data lake.
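To make the idea of identifying anomalies concrete, the sketch below flags outliers with a z-score check. This is a basic statistical baseline rather than a trained machine learning model, and it is shown only because it fits in a few lines. The readings and the threshold of 2.0 are made-up values for illustration.

```python
import statistics


def zscore_anomalies(values, threshold=2.0):
    """Return the values that lie more than `threshold` population
    standard deviations away from the mean."""
    mean = statistics.fmean(values)
    spread = statistics.pstdev(values)
    if spread == 0:
        return []  # all values are identical, so nothing stands out
    return [v for v in values if abs(v - mean) / spread > threshold]


# Hypothetical daily readings pulled from raw data in the lake.
readings = [10, 11, 9, 10, 12, 10, 11, 50]
anomalies = zscore_anomalies(readings)

print(anomalies)
```

In practice, teams often replace a baseline like this with a dedicated anomaly detection model once they have enough historical data to train one.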

Data Lakehouse: A Hybrid Approach

A data lakehouse integrates the strengths of data lakes and data warehouses, offering a flexible and scalable solution for data storage, metadata management, and analytics. It also incorporates robust data management and data governance practices, making it well suited to advanced analytics and machine learning use cases. Additionally, it provides a unified and reliable data source.

Hybrid Cloud Options For Data Lakes

Hybrid cloud options for data lakes present a scalable and flexible solution for data storage and analytics. These options support advanced analytics and machine learning use cases while keeping storage cost-efficient. Moreover, hybrid cloud deployments can integrate with a variety of tools and platforms, including metadata management tools, data warehouses, and business intelligence platforms.

Measuring Success And ROI

Establishing a comprehensive metrics and monitoring framework is important for measuring success and ROI effectively in a data lake environment. Key performance indicators (KPIs) and data quality metrics offer valuable insights into a data lake’s performance and data security. ROI analysis, in turn, helps assess cost-effectiveness, including storage costs, and identify opportunities for improvement.
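A basic ROI analysis compares the benefit attributed to the data lake with its total cost, using the standard formula ROI = (benefit - cost) / cost. The sketch below works through that arithmetic. Every figure in it (storage volume, price per terabyte, other costs, and the estimated benefit) is a made-up placeholder, not a benchmark.

```python
def yearly_storage_cost(terabytes, price_per_tb_month):
    """Storage cost for one year at a flat monthly price per terabyte."""
    return terabytes * price_per_tb_month * 12


def roi(total_benefit, total_cost):
    """Return on investment as a fraction: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


# Hypothetical yearly figures, all in the same currency.
storage_cost = yearly_storage_cost(terabytes=50, price_per_tb_month=20)
other_costs = 38_000        # assumed compute, tooling, and staffing costs
estimated_benefit = 65_000  # assumed value attributed to the data lake

total_cost = storage_cost + other_costs
lake_roi = roi(estimated_benefit, total_cost)

print(f"Total cost: {total_cost}, ROI: {lake_roi:.0%}")
```

The hard part in practice is usually estimating the benefit figure, so it helps to record how that number was derived alongside the calculation.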

Future Trends And Technological Advancements

Innovations such as artificial intelligence, edge computing, and blockchain technology are expected to shape the future of data lakes. Data lake platforms are poised to play a vital role in advancing analytics and machine learning use cases while also providing a scalable and adaptable solution for data storage. As data lakes continue to develop, there will be a growing focus on enhancing data management and governance practices across diverse data sources. Data life cycle management services can also help organizations bring data from multiple data lakes together for analysis.

Conclusion

Data lakes are a powerful and adaptable solution for advanced analytics and machine learning, providing an excellent option for organizations aiming to harness big data for competitive advantage. They come with a variety of advantages, such as greater scalability, improved analytics capabilities, and, when paired with sound governance, enhanced data quality. By understanding both the benefits and the challenges of data lakes, organizations can decide with confidence how to use them to advance their business objectives.

Frequently Asked Questions

What is leveraging machine learning?

Machine learning plays a significant role in analyzing vast datasets, which are essential for businesses as they expand operations and gather valuable information. Leveraging machine learning can streamline the data collection process, making it more efficient and effective.

What is an analytics data lake?

An analytics data lake is a data lake used as the foundation for analytics. It enables businesses to process and manage large volumes of data efficiently while ensuring scalability and security. Data lakes integrate with a wide range of analytical tools, which makes it possible to analyze real-world data and supports the development of predictive algorithms. Services such as Databricks SQL and AWS analytics services support direct queries against the data, providing insights for strategic decision-making.

What is the difference between ETL and data lake?

Extract, Transform, Load (ETL) is a process, while a data lake is a repository. ETL cleanses, filters, and organizes data before it is loaded into a target system. Used ahead of a data lake, it helps keep the lake clean and well structured, while the lake itself leaves room for further pre-processing. Many organizations also rely on ELT (Extract, Load, Transform), which loads raw data first and transforms it afterward.

How do data lakes relate to machine learning?

Data lakes have evolved beyond mere repositories of raw data and now function as central hubs that support business intelligence as the volume and variety of data grow. At the same time, machine learning, a subset of artificial intelligence, has emerged as a key approach for analyzing large data sources.